You have probably seen plenty of AI bots on social media, ecommerce websites and news blogs, and it is getting harder to tell bots and humans apart. Researchers from the University of Notre Dame conducted a study in which they built AI bots using LLMs and had human participants and the bots discuss politics on a customized instance of the social networking platform Mastodon. The experiment was run three times, and each round lasted four days. After each round, human participants were asked to identify which accounts in the political discussions were AI bots and which were humans.

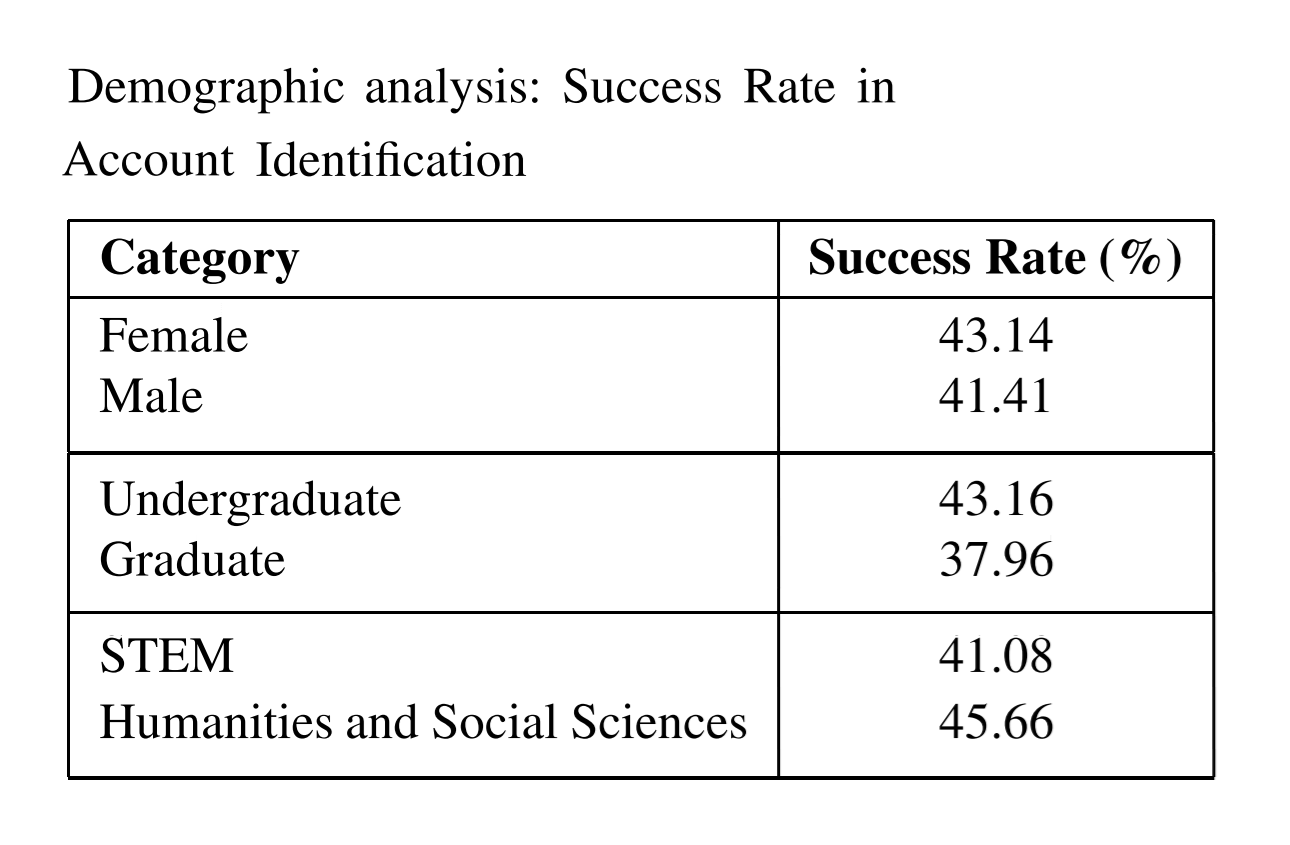

The results were striking: human participants "correctly identified the nature of other users in the experiment only 42% of the time", which means they answered wrong 58% of the time. Paul Brenner, a member of the research team, said that the participants knew beforehand that AI bots were involved, yet they still could not answer correctly even half of the time. A statement made by a human in an online discussion carries more weight than the same statement coming from an AI. So if AI bots can pass as human this often, they will be able to spread misinformation on the internet with little chance of being detected.

The AI bots used in this study were built on three models: ChatGPT, Llama-2 and Claude-2. The bots were also given personas for interacting with people, such as realistic, sensible or biased. They were asked to comment on politics according to their personas and to add some personal experiences. After the study, the researchers noted that the results had nothing to do with which LLM a bot was built on or which persona it used. Brenner said the team had predicted that the Llama-2 bots would be weaker and easier to identify, because it is a smaller model that cannot answer deep questions as well. But in social media conversations the model size made no difference, which suggests that even small, easily accessible models can be used to spread misinformation. The most successful and least detected personas were presented as women discussing politics with critical knowledge and strategic thinking.

Although there are many social media accounts where AI chatbots operate with human assistance, LLM-based bots are cheaper and faster to use. Stopping AI bots from spreading misinformation will require legislation and verification of social media accounts.
