Ever since ChatGPT made written content far easier to produce, a slew of AI content detectors have popped up. Many people have begun using these detectors in the hope of spotting AI-generated content. But how effective are they? A study conducted by the Department of Computer Science at Southern Methodist University tried to find out.

Researchers analyzed Claude, Bard, and ChatGPT to determine which of them was easiest to detect. Claude produced content that evaded detection for the most part. ChatGPT and Bard were better at detecting their own content, but they weren’t as good as Claude at avoiding detection by third-party tools.

AI content detectors work by looking for artifacts, that is, signs that a piece of content was made using a large language model. Each LLM comes with its own set of artifacts, which can make its output more or less challenging to pinpoint.

For the study, a 250-word piece of content was generated for each of around 50 topics. The three AI models being analyzed were then asked to paraphrase this content, and fifty human-written essays were also included.

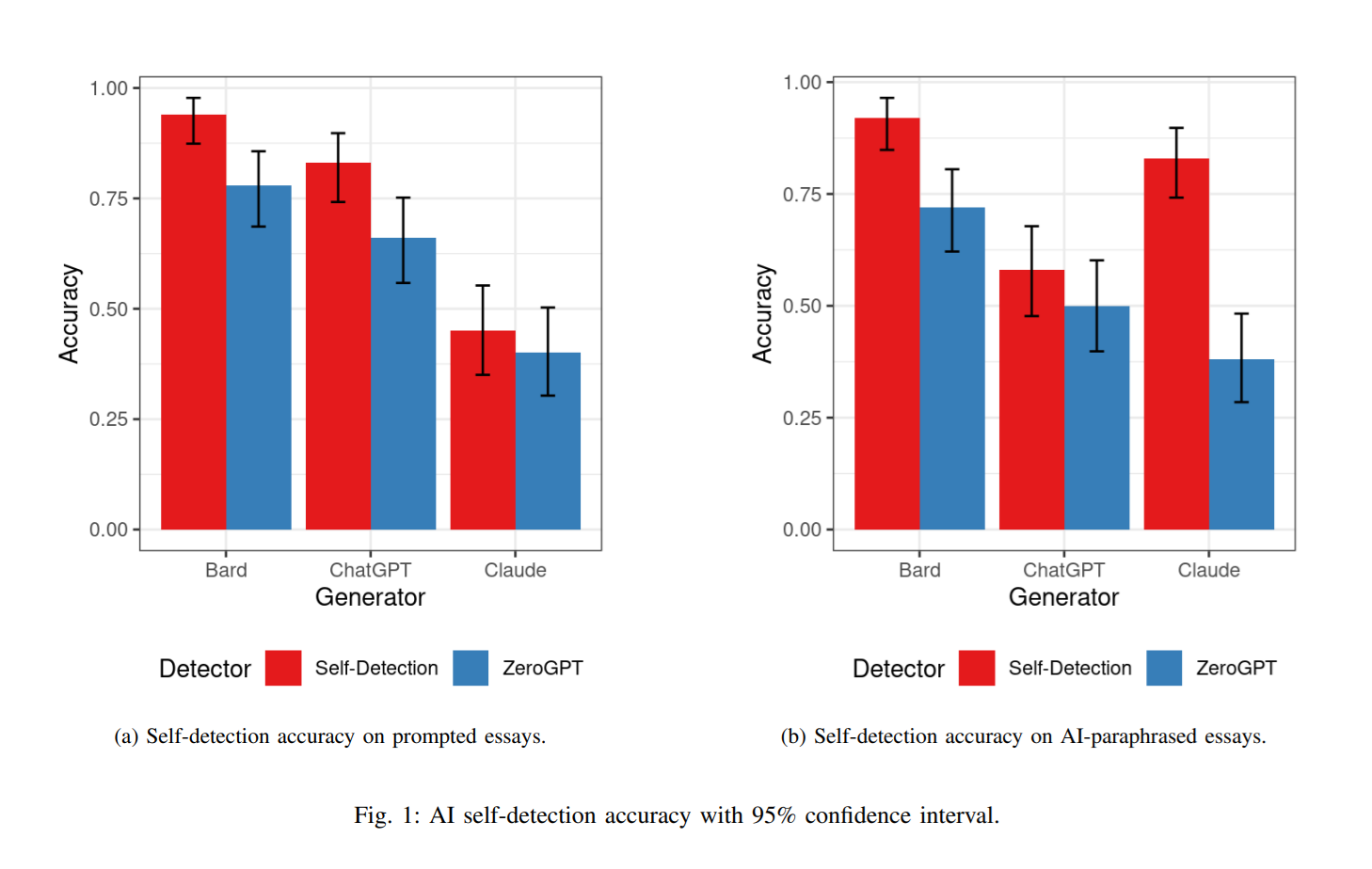

Zero-shot prompting was then used to have each model try to detect its own content. Bard had the highest accuracy when detecting its own content, followed by ChatGPT, with Claude in last place.
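To make the idea of zero-shot self-detection concrete, here is a minimal sketch in Python. It is not the study's code: the prompt wording, the `ask_model` callable, and the `fake_model` stand-in are all assumptions made for illustration, since the exact prompt is not given here. The point is only that a zero-shot prompt contains an instruction and the text to judge, with no labeled examples.

```python
from typing import Callable

# Hypothetical prompt wording; the study's actual prompt is not given in this article.
PROMPT_TEMPLATE = (
    "Answer with a single word, yes or no. "
    "Was the following text generated by you?\n\n{text}"
)


def build_prompt(text: str) -> str:
    """Zero-shot: only the instruction and the text, no labeled examples."""
    return PROMPT_TEMPLATE.format(text=text)


def parse_answer(reply: str) -> bool:
    """Treat a reply that starts with 'yes' as the model claiming authorship."""
    return reply.strip().lower().startswith("yes")


def self_detect(text: str, ask_model: Callable[[str], str]) -> bool:
    """ask_model is a hypothetical stand-in for whichever chat API is used."""
    return parse_answer(ask_model(build_prompt(text)))


def fake_model(prompt: str) -> str:
    """Demonstration-only model that always claims authorship."""
    return "Yes, I wrote this."


if __name__ == "__main__":
    print(self_detect("A short essay about solar power.", fake_model))
```

In practice `fake_model` would be swapped for a real call to the model being tested, and the yes/no answers would be compared against the known origin of each text.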

As for ZeroGPT, a third-party AI content detector, it detected Bard content around 75% of the time. It was slightly less effective at detecting GPT-generated content, and Claude’s content was the most likely of all the models’ to pass as not AI-generated.

Notably, ChatGPT’s self-detection hovered at around 50%. That is the same accuracy as guessing, which the study considered a failure. The self-detection of paraphrased content yielded even more interesting results: Claude registered a much higher self-detection score, and it was also the model that ZeroGPT detected least accurately.
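Why 50% amounts to guessing can be shown with a little arithmetic. The sketch below assumes a balanced evaluation set of 50 AI-written and 50 human-written texts. That split is an assumption for illustration, not a figure confirmed by the study. On such a set, a detector that answers "AI" every time scores exactly 50% without looking at the text at all.

```python
from typing import Sequence


def accuracy(predictions: Sequence[bool], labels: Sequence[bool]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def majority_baseline(labels: Sequence[bool]) -> float:
    """Accuracy of always predicting the most common label."""
    positives = sum(labels)
    return max(positives, len(labels) - positives) / len(labels)


# Assumed balanced set: True means AI-written, False means human-written.
labels = [True] * 50 + [False] * 50

# A "detector" that ignores its input and always answers "AI-written".
always_ai = [True] * len(labels)

if __name__ == "__main__":
    print(accuracy(always_ai, labels))   # 0.5
    print(majority_baseline(labels))     # 0.5
```

A detector is only doing useful work to the extent that its accuracy exceeds this baseline.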

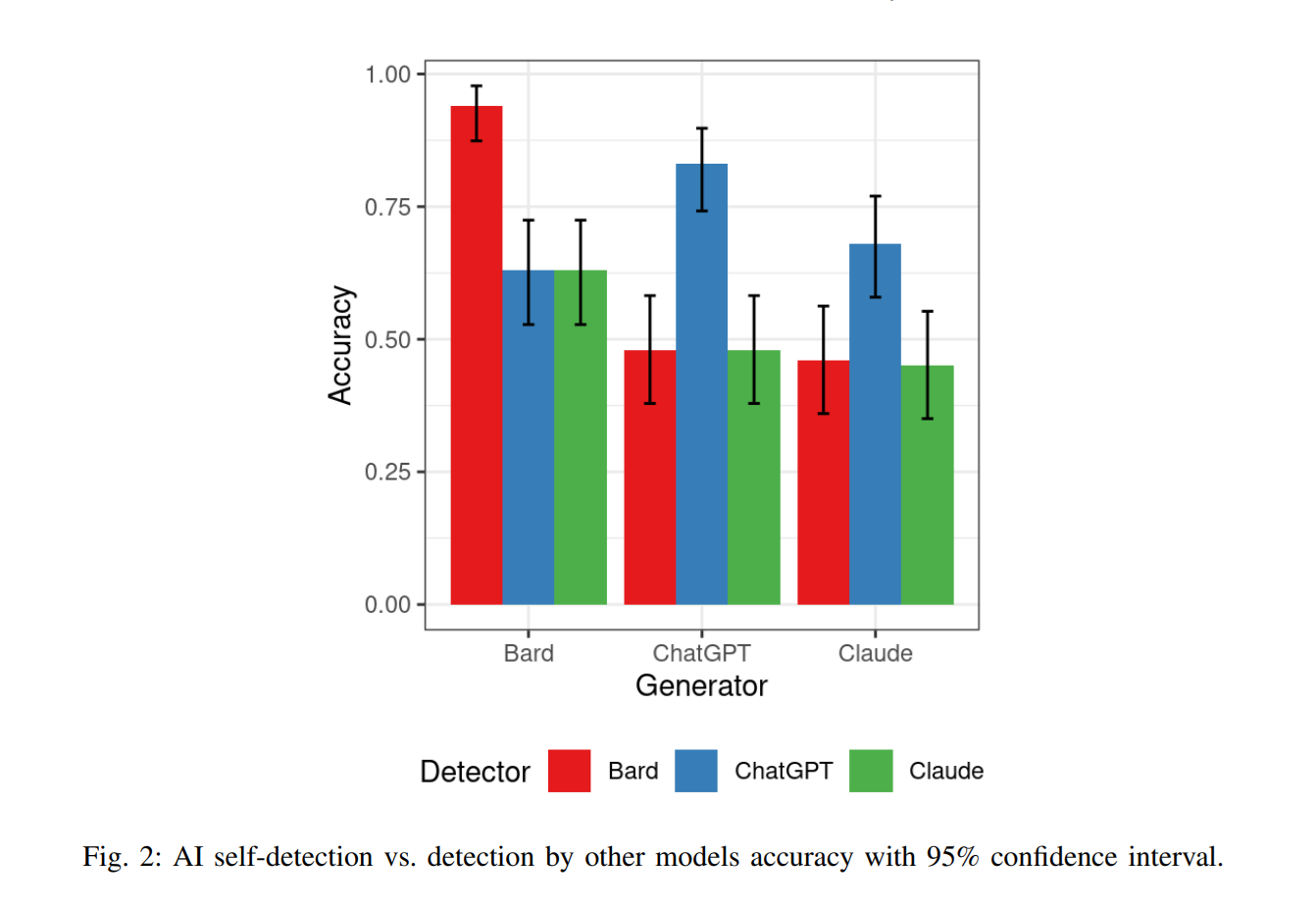

In the end, researchers concluded that ChatGPT can detect content it generated, but it appears to be less effective at recognizing paraphrased content. Bard performed reasonably well in both cases, but both models were far outstripped by Claude.

Claude was able to outsmart not only other AI models but also its own detection. This suggests that it had the fewest artifacts that could be used to determine the origin of its content. More study will be needed, but the signs point to Claude being the most reliable text generator of all.

Read next: AI Systems Are Facing Increasing Threats According to This Report

