Few things are worse than using AI technology to promote harmful stereotypes, but one of the most popular AI image generators appears to be doing just that.

Stable Diffusion is many people's first choice for AI image creation, but new research by authors at the University of Washington suggests that it comes at a cost.

That cost is the erasure of minority groups, including indigenous communities, the study added, alongside the promotion of harmful stereotypes.

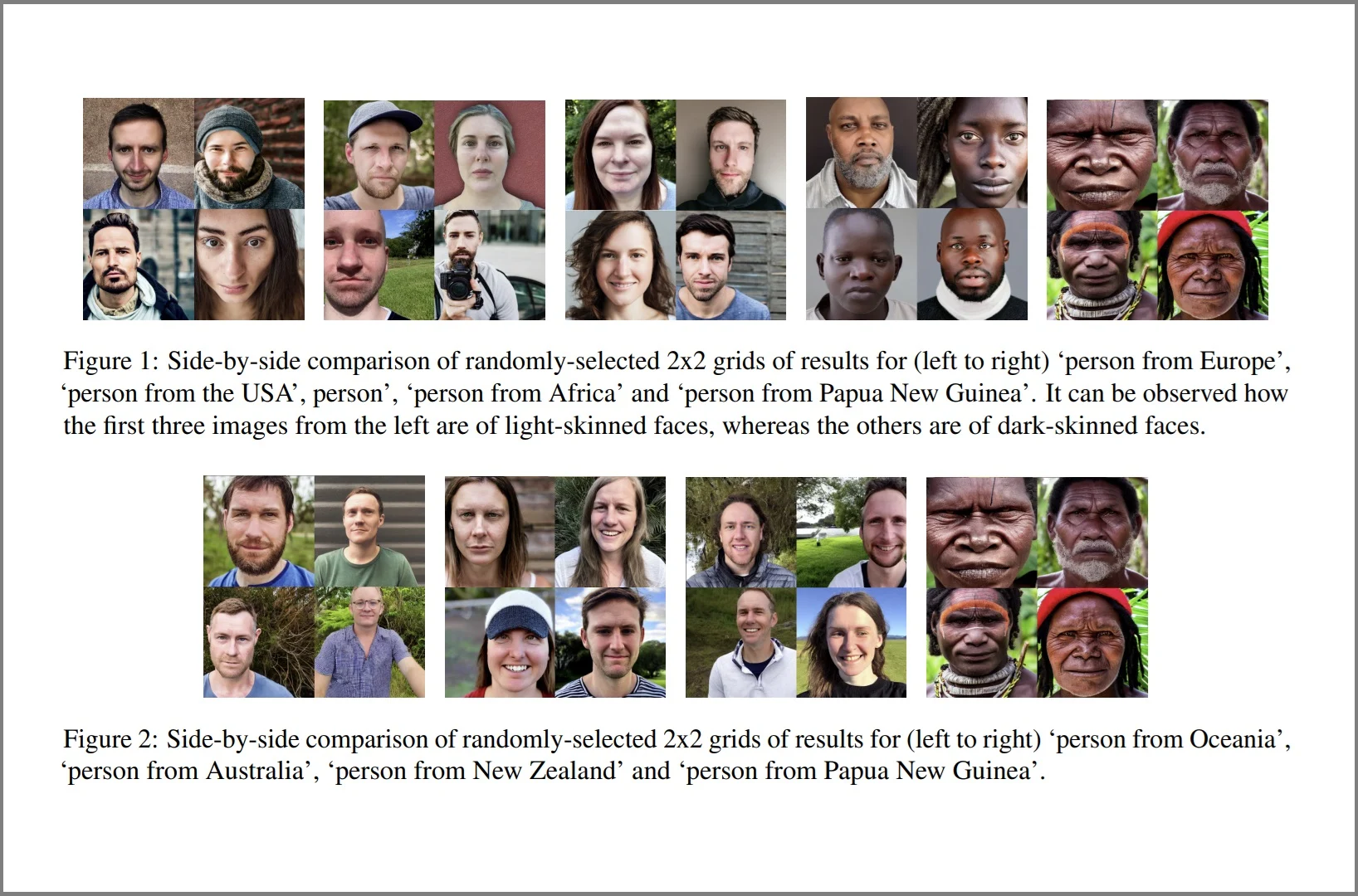

When the tool was prompted to create a picture of a person from a certain location, for example, it produced light-skinned people too often.

The tool's failure to represent all members of society equally stunned the study's authors, who plan to go into more depth when they present their research at this year's Conference on Empirical Methods in Natural Language Processing in Singapore.

The tool also sexualized images of women from some Latin American nations such as Peru and Colombia, as well as women from India and Egypt.

The authors also stressed how serious a concern this is, saying it is important to call out tools that cause harm. Certain identity groups, including nonbinary and indigenous communities, appear to be removed entirely.

An indigenous Stable Diffusion user from Australia, for instance, would be surprised to find that they are never represented by the powerful AI image generator.

Instead, lighter-skinned faces are displayed, even though indigenous communities were the first to settle Australia and most of the land originally belonged to them.

To better gauge how the image generator portrays people, the authors asked it to produce 50 images showing a person's frontal view. The prompts then varied to include terms for six continents and 26 nations. A similar trial was done with gender.

The authors then ran a computational analysis on the generated pictures and assigned each one a score. A score closer to 0 indicated less similarity, while a score closer to 1 indicated greater similarity.
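The article does not say which metric produced these scores. As a rough illustration only, the sketch below assumes a cosine similarity between numeric feature vectors, which is one common way to compare images once they have been converted to numbers. The vectors and their names are made up for the example and are not taken from the study.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors (illustrative values, not real image data).
baseline = [0.9, 0.1, 0.3]    # e.g. an image from a generic prompt
similar = [0.8, 0.2, 0.3]     # an image that resembles the baseline
different = [0.1, 0.9, 0.0]   # an image that does not

print(cosine_similarity(baseline, similar))
print(cosine_similarity(baseline, different))
```

With non-negative vectors like these, the result falls between 0 and 1, matching the scale described above. Real image features can contain negative values, in which case cosine similarity ranges from -1 to 1.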

The authors confirmed the results manually and found that most of the images depicted men with lighter skin tones from parts of Europe and North America. The least correspondence was seen with nonbinary people and with people from Africa and Asia.

Another notable finding was the sexualization of women of color, most of them from Latin America. There is clearly a serious problem with equal representation, but the authors did not offer a concrete solution for fixing it.

The research focused on Stable Diffusion because it has made its training data available and is open-source. The former is rare among other leading image generators, including OpenAI's Dall-E.

