Google has just rolled out its latest AI offering, dubbed Gemini Pro, and the company made some major promises during the grand unveiling. But it appears that all that glitters may not be gold for the ChatGPT archrival.

It’s been less than a month since the search engine giant released the demo video that had people talking. In the end, though, the Android maker came under intense criticism for what appeared to be a staged interaction between the presenter and the AI.

Now, a new study has produced some surprising findings, including that the model Google calls the most powerful one available to users is actually far from ideal. When its performance was compared with that of OpenAI’s older GPT-3.5 model, Gemini Pro came out behind.

In other words, Google’s newest and supposedly most powerful LLM, which took months to reach the market, falls short of experts’ expectations. Remember, GPT-3.5 is not only older and less advanced but also available for free. Users who pay a subscription fee get access to GPT-4 and GPT-4V, and those subscribers are making the most of OpenAI’s latest models.

The researchers at Carnegie Mellon University reached this conclusion by having the model carry out a range of tasks, including writing. Notably, the text it produced was rated lower in quality than text generated by similar tools from competitors.

Findings like these are bound to sting Google’s leadership, which has spent considerable time and money marketing Gemini Pro as the next big thing in AI. Yet the study found the model to be, at best, comparable to OpenAI’s GPT-3.5 Turbo and, in terms of accuracy, inferior to it.

When Google was contacted for comment on the findings, a spokesperson pointed to the company’s own studies, which he said showed the opposite: not only does Gemini Pro outperform GPT-3.5, but the yet-to-be-released Gemini Ultra reportedly scores higher than GPT-4, OpenAI’s most capable model so far.

You can read the technical paper and Google’s response for more detail, but from what we can tell so far, this is definitely alarming news for the company. Of course, more independent studies are needed to get to the bottom of this and determine how capable Gemini Pro really is. For now, Google has disputed the study’s claims and is standing by its internal research. It has also accused the authors of relying on poor benchmarks for the comparison and of results affected by data contamination.

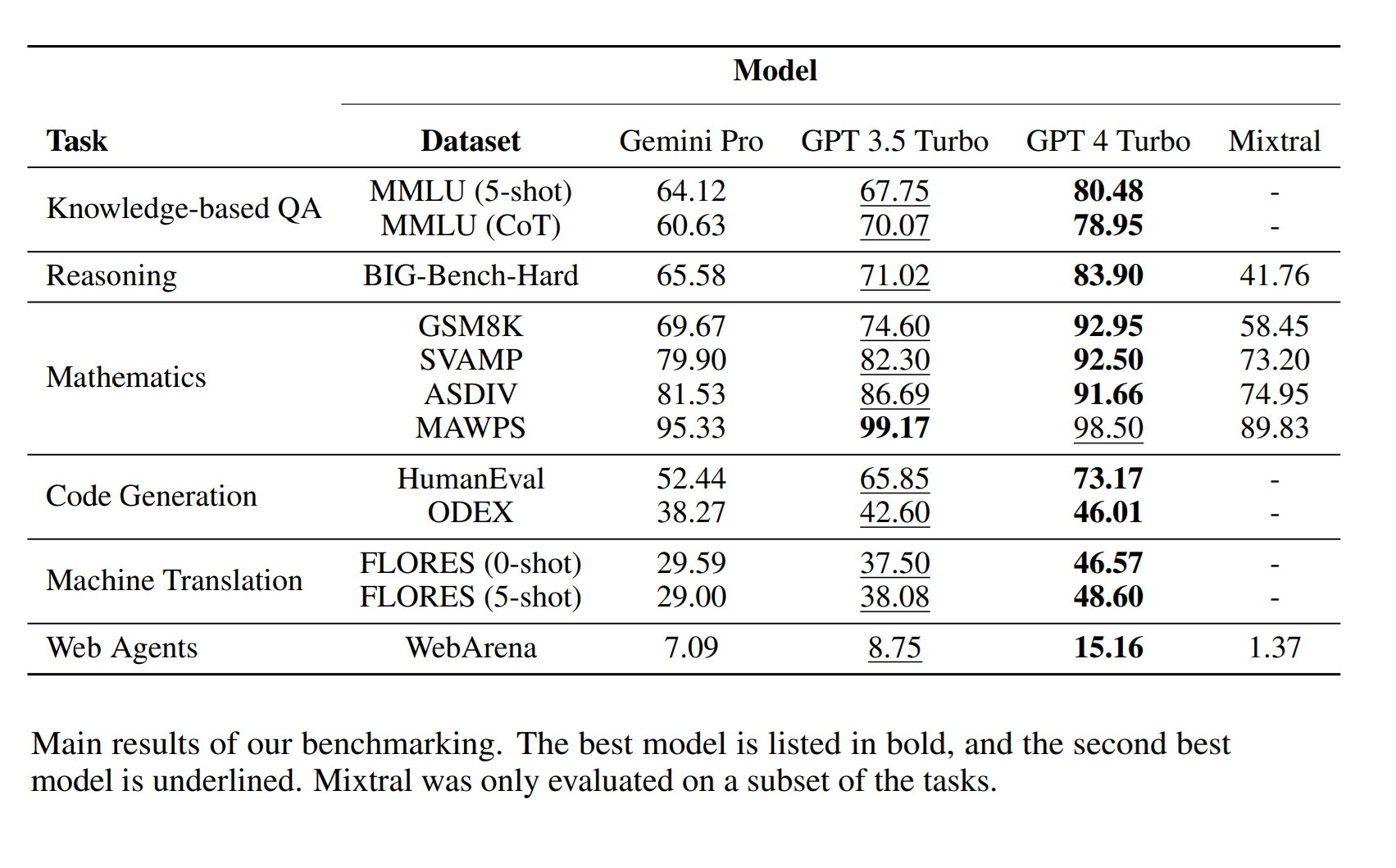

The researchers reportedly tested four large language models: Google’s Gemini Pro, OpenAI’s GPT-3.5 Turbo and GPT-4 Turbo, and Mistral AI’s Mixtral 8x7B. They used LiteLLM, an open-source library that provides a single interface for querying different model APIs, to run all four models across a variety of prompts over four days. Gemini Pro’s programming performance also fell short of expectations.

Its accuracy was lower than that of the other models, and on multiple-choice questions (MCQs) it showed a bias toward answer "D", choosing it disproportionately often even when it was incorrect.

So what does this really mean for Google? It is certainly a major blow to the company. Google has been trying to get ahead in the AI race, but one setback after another keeps holding it back. Meanwhile, its most advanced and powerful variant, Gemini Ultra, will not launch until next year. That means that, as far as performance is concerned, the tech giant appears to be lagging behind in the AI race.