Apple is a formidable player in the world of technology and innovation. And thanks to its latest research, which many consider groundbreaking, it could soon be at the forefront of AI.

The iPhone maker has just published two new AI research papers. One introduces a new technique for creating 3D avatars, and the other a more efficient way to run language model inference. Such advancements could open up new possibilities and allow complex AI systems to run on consumer devices such as iPhones and iPads.

Photo: DIW-AI-gen

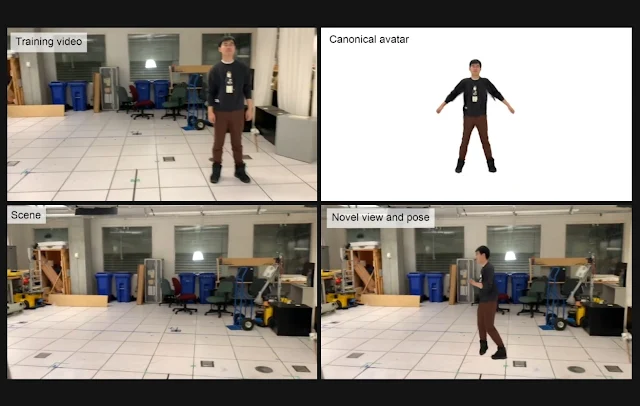

In the first paper, the authors propose HUGS (Human Gaussian Splats), a method for producing animatable 3D avatars from short monocular videos, meaning videos shot with a single camera. Working from a small number of frames, it disentangles the static scene from the person in it and generates an animatable human avatar in as little as 30 minutes.

HUGS represents both the human and the scene with 3D Gaussian splatting. The human Gaussians are initialized from SMPL, a statistical body shape model, but are allowed to deviate from it, which lets the method capture intricate details such as clothes and hair. Meanwhile, a neural deformation module animates the Gaussians realistically through linear blend skinning.

Coordinating the movements of the individual Gaussians in this way prevents artifacts and makes it possible to repose the avatar. The result is a rendering of the human in new poses, together with new views of both the human and the surrounding scene.
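To make the blend skinning step concrete, here is a minimal sketch of linear blend skinning for a single point. It is not the authors' implementation: the function names, the two-joint setup, and the fixed weights are assumptions chosen for illustration, whereas HUGS learns the skinning weights for its Gaussians. Each joint contributes a rigid transform (a rotation plus a translation), and the posed point is the weighted average of the point moved by each joint.

```python
def apply_transform(rotation, translation, point):
    """Apply a rigid transform (3x3 rotation, 3-vector translation) to a 3D point."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def blend_skin(point, weights, transforms):
    """Linear blend skinning: weighted average of the point under each joint transform.

    weights:    one skinning weight per joint, expected to sum to 1
    transforms: one (rotation, translation) pair per joint
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("skinning weights must sum to 1")
    posed = [0.0, 0.0, 0.0]
    for weight, (rotation, translation) in zip(weights, transforms):
        moved = apply_transform(rotation, translation, point)
        for axis in range(3):
            posed[axis] += weight * moved[axis]
    return tuple(posed)


# Hypothetical example: one joint stays put, the other shifts 2 units along x.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
joints = [(IDENTITY, (0.0, 0.0, 0.0)), (IDENTITY, (2.0, 0.0, 0.0))]
posed_point = blend_skin((1.0, 1.0, 1.0), [0.5, 0.5], joints)
```

A point influenced equally by both joints ends up halfway between the two transformed positions, which is why nearby points with similar weights move together rather than tearing apart.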

Compared with earlier avatar creation techniques, HUGS trains faster and needs less training data. The results are strikingly photorealistic after only about half an hour of optimization on a typical gaming GPU.

The authors also report results that surpass those of earlier methods such as Vid2Avatar and NeuMan. All told, the 3D modeling capabilities shown by Apple’s researchers are impressive.

Studies like this are unlocking the potential of AI for avatar creation in the future. Just the thought of creating all of this at the tap of a button on your phone’s camera is mind-blowing, the authors concluded.

Photo: Apple

The second paper tackles a different problem: running LLMs on devices with limited memory. Today, top-of-the-line models like OpenAI’s GPT-4 typically run inference on expensive, high-end hardware.

Apple’s approach keeps the model’s parameters in flash memory and brings them into DRAM only when needed during inference. The researchers build an inference cost model that is in tune with how flash memory behaves, and it guides optimization in two areas: reducing the volume of data transferred from flash, and reading data in larger, more contiguous chunks.

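These goals are served by two techniques dubbed Windowing and Row-Column Bundling. The former reuses the neurons activated for recent tokens so that less new data has to be loaded, and the latter stores related rows and columns together so that data can be read from flash in bigger blocks.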
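The two ideas can be illustrated with a toy sketch. This is a simplified illustration under stated assumptions, not the paper's implementation: the function names, the use of plain Python lists in place of flash storage, and the way active neurons are supplied as ready-made sets are all hypothetical. `windowed_loads` shows that only neurons not already cached from the last few tokens need to be read, and `bundle_neuron` shows one neuron's up-projection and down-projection weights stored as a single contiguous record.

```python
from collections import deque


def windowed_loads(active_per_token, window=2):
    """Return, per token, the neuron ids that must be read from flash.

    active_per_token: list of sets of neuron ids needed for each token.
    Neurons used by any of the previous `window` tokens are assumed to
    still be cached in DRAM, so only the missing ones are loaded.
    """
    recent = deque(maxlen=window)  # active sets of the previous tokens
    loads = []
    for active in active_per_token:
        cached = set().union(*recent)
        loads.append(sorted(active - cached))
        recent.append(set(active))  # oldest set drops out automatically
    return loads


def bundle_neuron(up_weights, down_weights):
    """Store one neuron's up- and down-projection weights as one record."""
    return list(up_weights) + list(down_weights)


def unbundle_neuron(record, d_model):
    """Split a bundled record back into its two halves after a single read."""
    return record[:d_model], record[d_model:]


# Hypothetical activity pattern for four consecutive tokens.
loads = windowed_loads([{1, 2, 3}, {2, 3, 4}, {3, 4, 5}, {1, 5}], window=2)
record = bundle_neuron([0.1, 0.2], [0.3, 0.4])
up, down = unbundle_neuron(record, d_model=2)
```

In this example the first token loads three neurons, while each later token loads only one, because most of what it needs is already cached. Bundling means that fetching a neuron takes one read of twice the size instead of two separate smaller reads.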

Such advancements are essential for running LLMs in resource-constrained environments. They would broaden where LLMs can be used and how accessible they are, the authors added. Before long, this sort of optimization could allow sophisticated AI assistants to run smoothly on iPhones, iPads, and other Apple products.

To sum it up, both papers show how Apple continues to push forward in AI research and its applications. It’s a promising endeavor, but critics note that deploying the technology across a wide range of products will require great care and responsibility.

The impact on society needs to be considered as well, and the matter of safeguarding privacy needs further evaluation.

If done correctly, this could be a revolution in its own right, and Apple deserves credit for trying to take AI to the next level.

