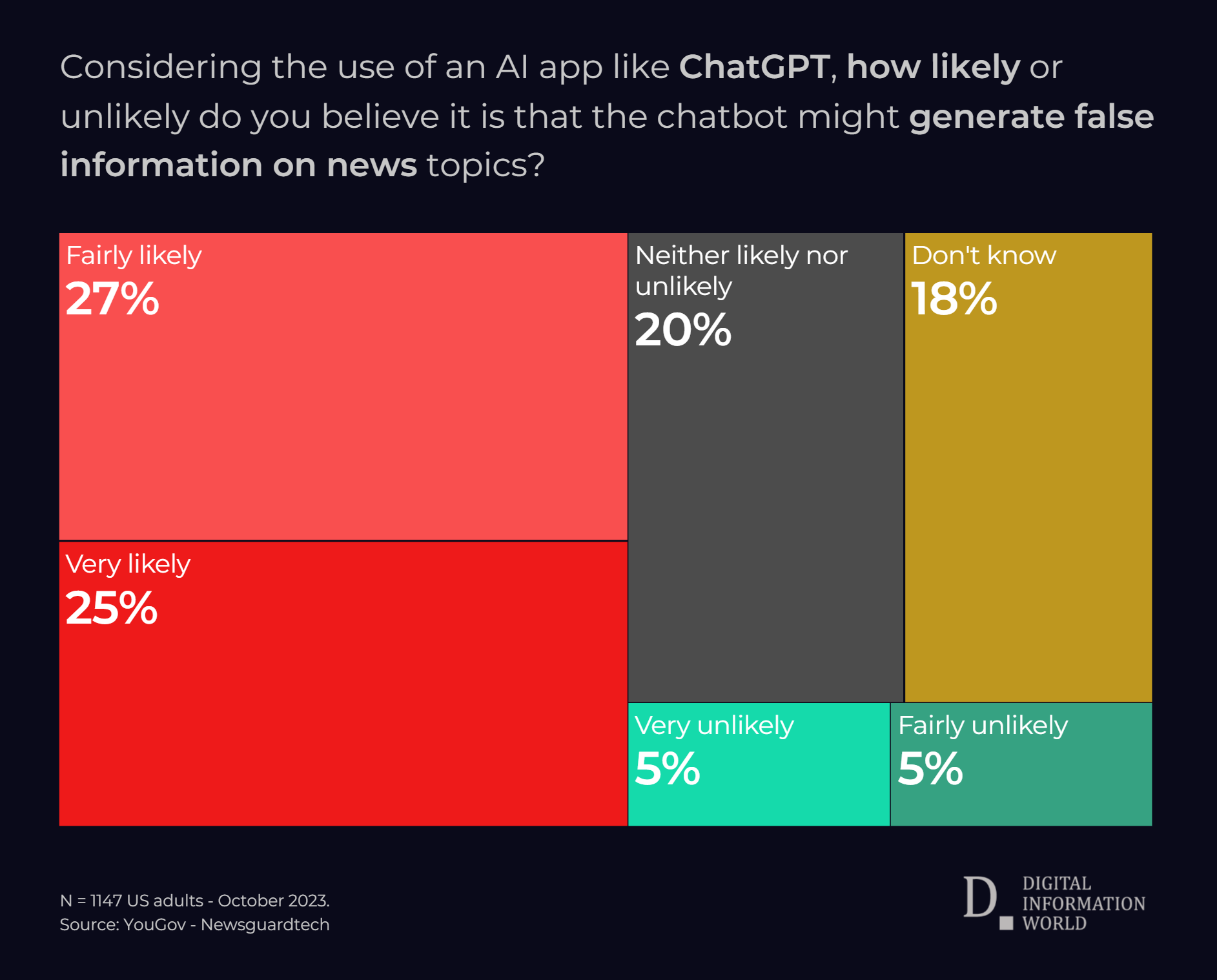

Generative AI could transform the world, yet many people worry about its capacity to create and spread misinformation. A YouGov survey conducted on behalf of NewsGuard found that 52% of Americans think it's likely that AI will create misinformation in the news, with just 10% saying that the chances of this happening are low.

Generative models have a tendency to hallucinate. This means that a chatbot will generate information in response to a prompt regardless of whether it is factual. This doesn’t happen all the time, but it occurs often enough that AI-generated content can’t always be trusted and may contain false news.

When researchers tested GPT-3.5, they found that ChatGPT provided misinformation in 80% of cases. The prompts were specifically engineered to find out whether the chatbot was prone to misinformation, and it failed the test in the vast majority of cases.

There is a good chance that people will start using generative AI as their primary news source because it can provide instant answers. However, with so much misinformation being fed into these models, the risks will become increasingly difficult to ignore.

Election misinformation, conspiracy theories and other false narratives are common in ChatGPT's output. In one example, researchers got the model to parrot a conspiracy theory about the Sandy Hook mass shooting, with the chatbot saying that it was staged. Similarly, Google Bard stated that the shooting at the Pulse nightclub in Orlando was a false flag operation. Both conspiracy theories are commonplace among the far right.

It is imperative that the companies behind these chatbots take steps to prevent them from spreading conspiracy theories and other misinformation that can cause tremendous harm. Until that happens, the dangers they create will compound over the next few years.

