In a notable development, researchers at the University of Kansas have unveiled an AI detection tool designed to identify AI-written scientific papers with near-perfect accuracy. Because it reliably distinguishes human-written text from AI-generated text, the detector offers some reassurance amid widespread concern over AI's growing role in academic writing.

Led by Professor Heather Desaire, the research team tackled the AI detection problem by focusing specifically on scientific writing in chemistry. General-purpose AI detectors cover a broad range of text with only moderate success. Desaire's tool, by contrast, is tuned to this specialized field, and it performed strongly in tests on content from the American Chemical Society's journals.

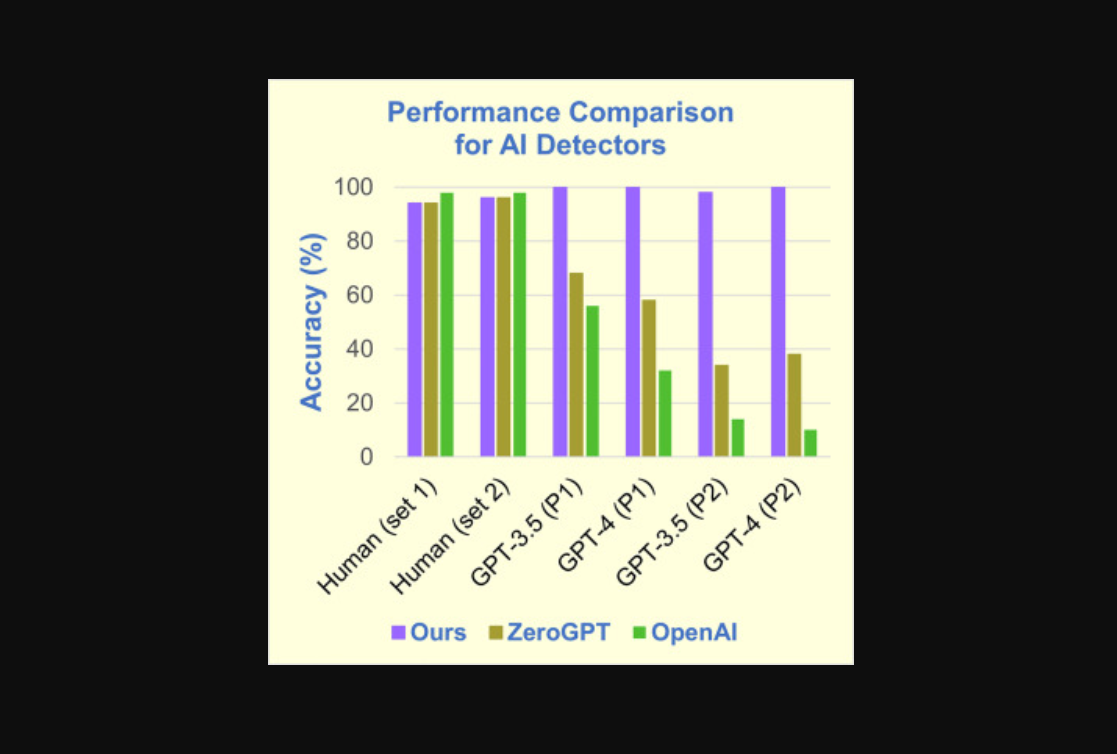

The detector identified human-written introductions with perfect accuracy. Its only slight stumble came with introductions generated by ChatGPT, which it still detected with an impressive 98% accuracy.

General-purpose classifiers lagged far behind on the same task. ZeroGPT achieved only 37% accuracy, while OpenAI's own classifier got it wrong 80% of the time.

The result is promising for academic integrity at a time when scholarly publishers are grappling with the rise of AI text generators. Tools like this could help safeguard the quality of scientific discourse by keeping capable but fallible AI systems from diluting the literature with fabricated or low-value content.

While warning about the risk of AI-generated falsehoods seeping into academia, Desaire also shared a humorous but cautionary anecdote: a ChatGPT-written biography of her got her credentials entirely wrong.

Even so, she remains hopeful and rejects the defeatist view that AI is an unstoppable force. She advocates proactive editorial measures and sees this advance as evidence that the fight to preserve the integrity of the scientific literature is winnable.