Last week, tech giant Microsoft revealed that Bing AI was getting an upgrade to its image-creation capabilities, among other major improvements.

And now we're hearing more on this front, including how Microsoft's step forward here might actually turn out to be a massive step back.

For those who don't know, Bing's image creation system was revamped to run on a newer model, DALL-E 3, which was hailed as more capable. DALL-E 3 drew so much traffic that the company warned image generation could be very slow at first.

Meanwhile, another major issue has emerged around DALL-E 3: Microsoft has stepped in further since the upgrade rolled out.

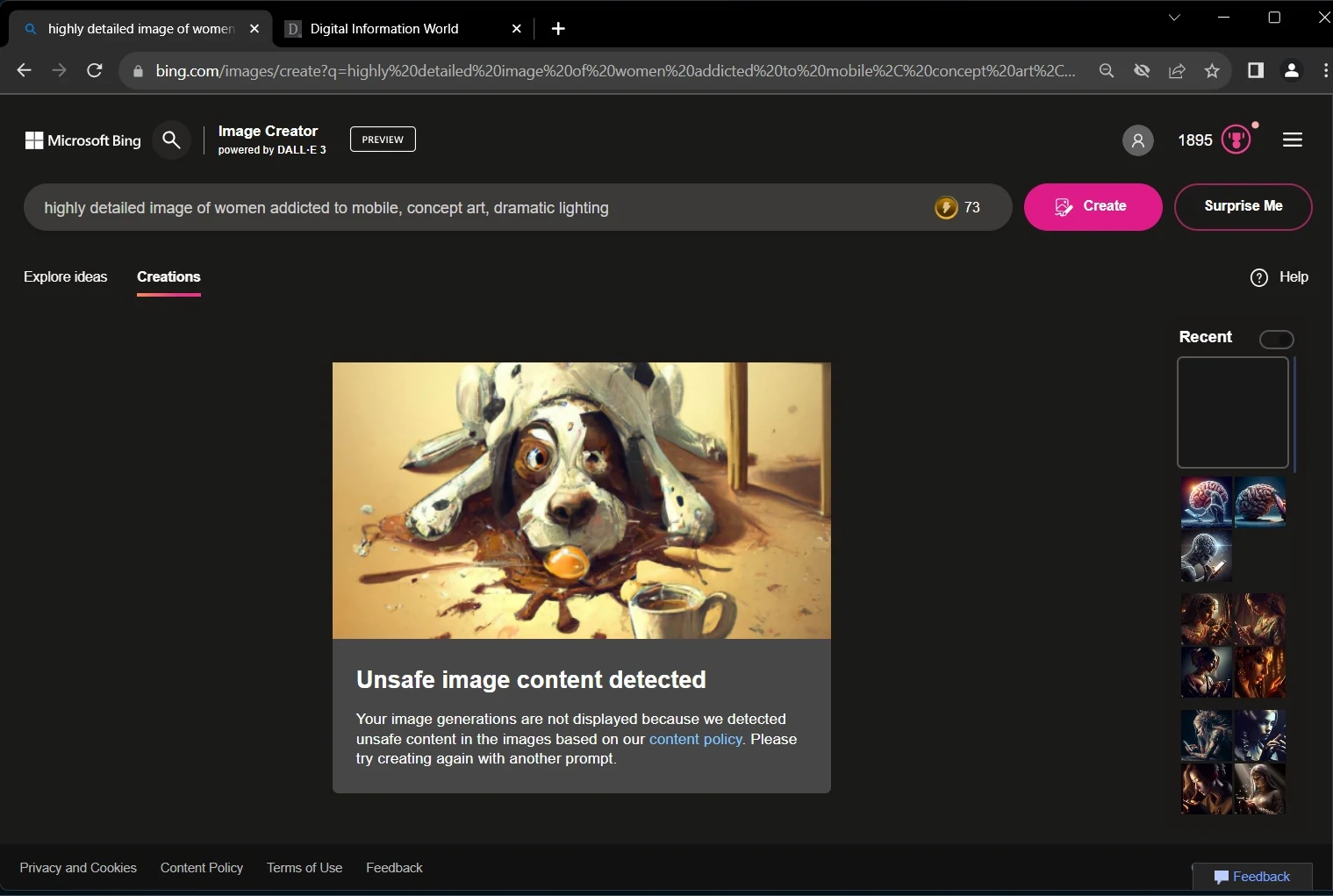

The latest update is that the tool now has its own content moderation system, which prevents inappropriate images from being shown. While that might sound helpful at first, the censorship is far more stringent than users expected.

We suspect this is a reaction to the kind of content Bing's AI users have been requesting. The most notorious example is a controversial image depicting Mickey Mouse carrying out the 9/11 attacks.

That explains why people were worried, but the problem goes beyond such extreme cases. Users are now left searching for reasons why their ordinary image requests are being rejected. Understandably, they are unhappy: when you ask a chatbot to do something, the least you expect is a response that fulfills the request.

However, the opposite is happening here.

Only a week earlier, Bing AI raised no objections when people asked it to produce violent images, even ones featuring popular characters.

That is why this crackdown may turn out to be a huge overreaction. Microsoft was too laid back in its initial rollout, but now it is overcorrecting, and users are struggling to understand what is going on.

Another issue, as one Reddit user pointed out, is that Bing AI ends up censoring its own output. There's even a new button labeled "Surprise Me" that generates a random picture. Either way, having the tool produce an image and then immediately censor it is frustrating.

Has Microsoft gone too far? Well, experts certainly seem to think so. This isn't what many fans were hoping for, and it's time the company acted before it's too late.
