Deepfakes were perhaps the first widely seen demonstration of what AI can really do, and they’ve only gotten better over the years. While the first few deepfakes that made the rounds were relatively light-hearted, such as the many videos in which people posed as Tom Cruise, it didn’t take long for the more sinister aspects of the technology to come to light.

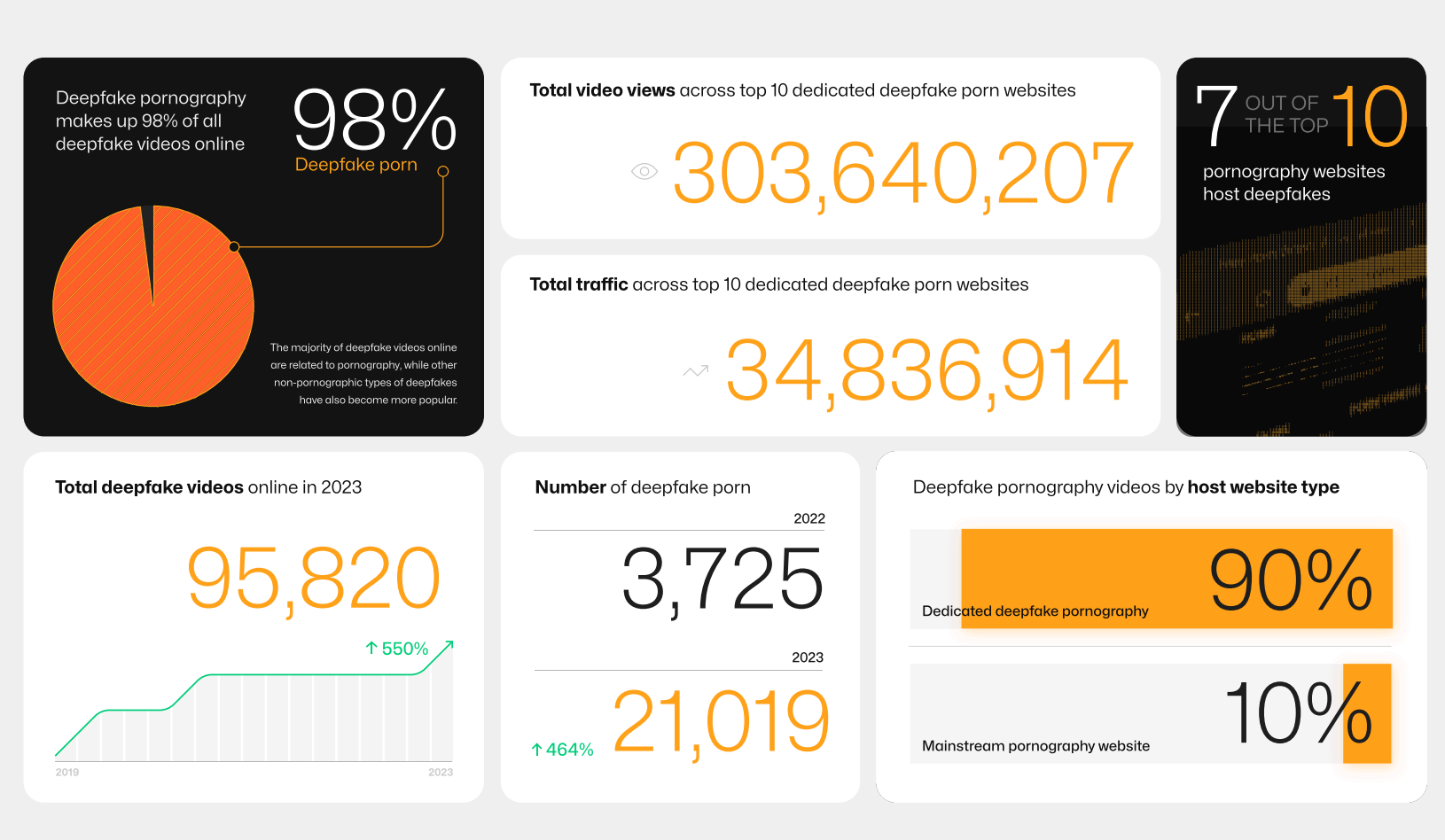

Their usefulness for political disinformation, and misinformation in general, has already made them a contentious topic. However, the real danger of deepfakes lies elsewhere. The 2023 State of Deepfakes report by Home Security Heroes found that 98% of all deepfakes fall into one specific genre: explicit content.

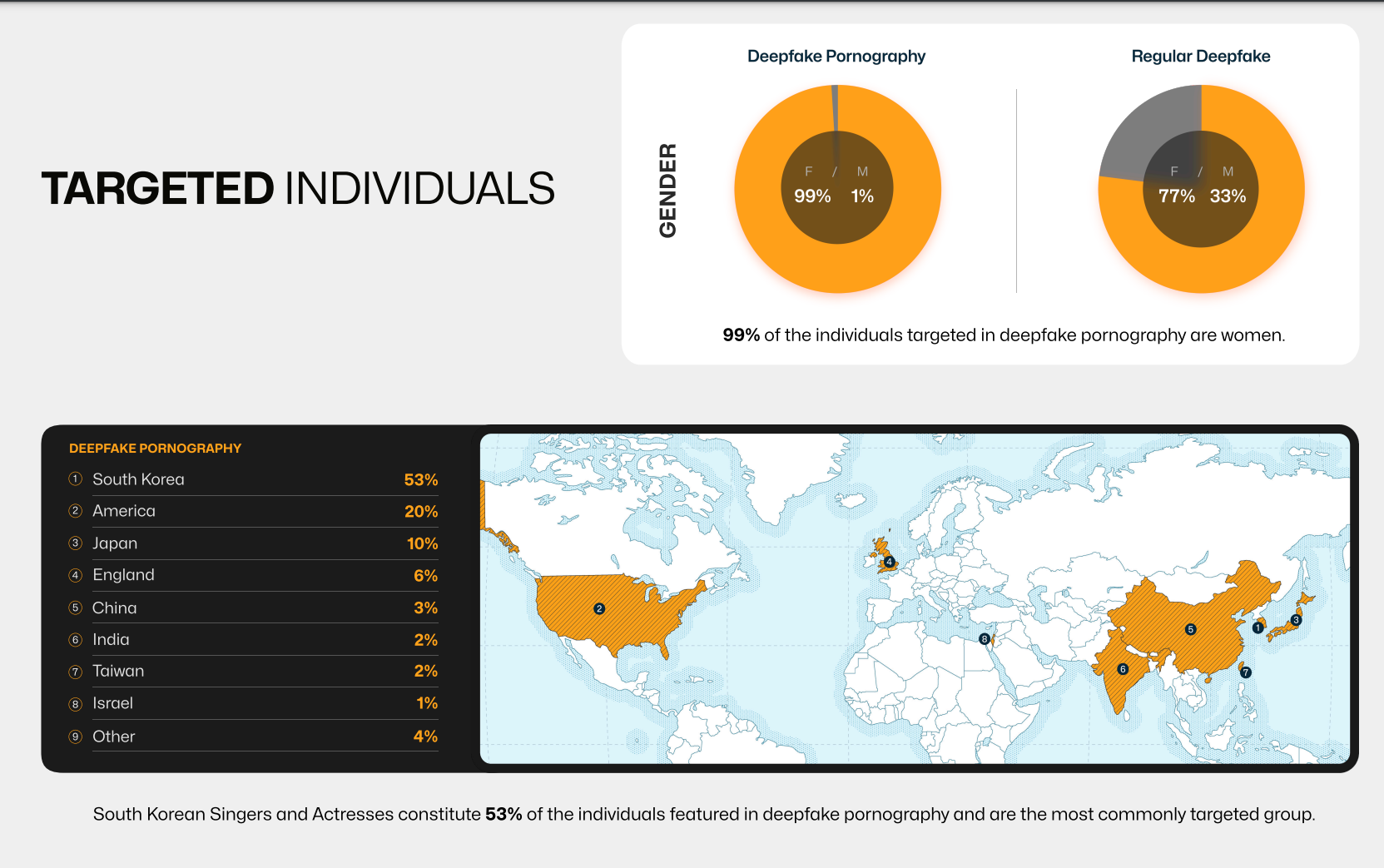

It’s unsurprising that technology capable of flawlessly mimicking someone’s appearance is used to make adult videos, but the fact that the vast majority of deepfakes end up there is a cause for concern. Women make up 98% of the people whose likenesses are used in explicit deepfakes, while 48% of viewers are men. More importantly, 74% of men surveyed said they see nothing wrong with explicit deepfakes, or at least feel no guilt about it.

Another alarming statistic from the report: the number of deepfakes online rose by 550% between 2019 and 2023, and nearly all of them are used in the adult content industry. Sites specializing in explicit deepfakes have received just under 5 million unique visitors so far this year, and 7 of the 10 most popular adult video streaming sites feature them as well.

If this were simply a question of adult actresses being replaced by AI, it would be a different debate. In reality, most explicit deepfakes are made without the subject’s consent. Countless women have been blackmailed with deepfakes portraying them in a variety of compromising scenarios, and many of these cases can be seen as a form of assault. Minors aren’t safe from deepfakes either: a recent case in Spain involved 30 girls, all between 12 and 14 years old.

With women as the main victims, and nearly 3 out of 4 men failing to see what is unethical about explicit deepfakes, it’s more important than ever that governments pass regulations to mitigate the severe psychological damage these videos can cause. Unfortunately, hardly any states have passed laws regarding deepfakes. Fewer still have made creating an explicit deepfake without consent a criminal offense, forcing many victims to pursue their cases in civil court.

Read next: When AI Gets Too Friendly: CEO Altman's Worries About AI Companions