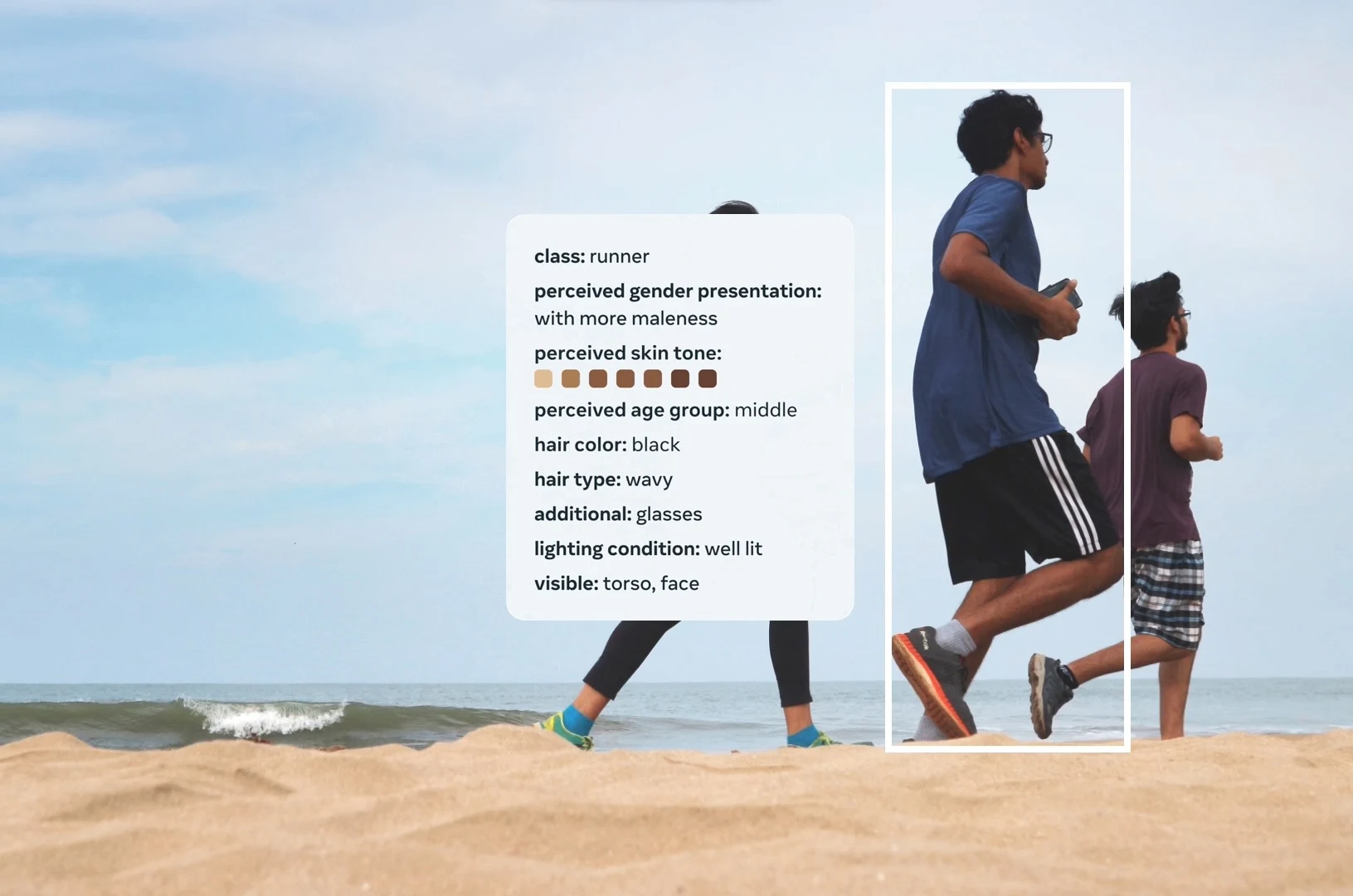

To promote greater fairness and inclusivity in artificial intelligence, Meta (formerly known as Facebook) has unveiled a new resource: the FACET dataset. FACET, which stands for FAirness in Computer Vision EvaluaTion, is a collection of 32,000 images labeled by human annotators. The dataset is meant to help check whether AI systems recognize people consistently across a wide range of demographic attributes, from gender and skin tone to hairstyle and beyond.

The need to eliminate biases and errors that have historically been present in AI models motivates this project. Meta seeks to enable AI developers to design models that are more egalitarian and representative of varied populations by offering a comprehensive database of photos assessed for various demographic attributes.

One of the primary motivations behind this effort is the recognition that AI systems can sometimes exhibit performance disparities across different demographic groups. For example, they may struggle to accurately detect individuals with darker skin tones or coily hair. These disparities can lead to unequal user experiences, disproportionately affecting specific communities.

Meta aims to address these issues with the FACET dataset. By giving AI developers a broader range of demographic attributes to measure against, the resource can help narrow the fairness gap. Engineers can see where a model performs worse for a particular group and work toward AI systems that function more reliably across different populations.

According to Meta, evaluating fairness in computer vision is a challenging task. AI systems must reliably recognize and categorize individuals regardless of their physical traits. The FACET dataset gives researchers and practitioners a common benchmark for assessing the fairness and robustness of their models in this setting.
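As a rough illustration of what such an evaluation can look like, the sketch below computes a detection rate (recall) for each demographic group and the gap between the best and worst group. It is a minimal sketch, not FACET's actual evaluation protocol or data format: the group names, the `records` list, and the helper functions `recall_by_group` and `recall_gap` are all hypothetical and exist only for this example.

```python
from collections import defaultdict

def recall_by_group(records):
    """Return the fraction of labeled people detected, per group.

    `records` is an iterable of (group, detected) pairs, one per labeled
    person. This layout is an assumption made for illustration only.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}

def recall_gap(recalls):
    """Difference between the best and worst per-group recall."""
    return max(recalls.values()) - min(recalls.values())

# Made-up results: whether a hypothetical detector found each labeled person.
records = [
    ("lighter", True), ("lighter", True), ("lighter", True), ("lighter", False),
    ("darker", True), ("darker", False), ("darker", False), ("darker", True),
]

recalls = recall_by_group(records)
print(recalls)
print(recall_gap(recalls))
```

A gap near zero suggests the model detects people at similar rates across groups, while a large gap points to the kind of performance disparity a fairness benchmark is meant to surface.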

Importantly, Meta emphasizes that FACET is intended solely for research and evaluation purposes. It is not designed to train AI models but to serve as a valuable resource for benchmarking fairness. The ultimate goal is for FACET to become a standard benchmark in the field of computer vision, enabling developers to assess fairness across a broader set of demographic attributes.

In conclusion, Meta's FACET dataset marks a substantial step toward improving fairness and representation in artificial intelligence. By offering a broad and carefully labeled dataset, Meta aims to encourage researchers and developers to build AI models that perform consistently and fairly across all demographics. By helping to expose biases in current AI systems, the dataset has the potential to advance the technology and lead to more inclusive and equitable AI tools.

