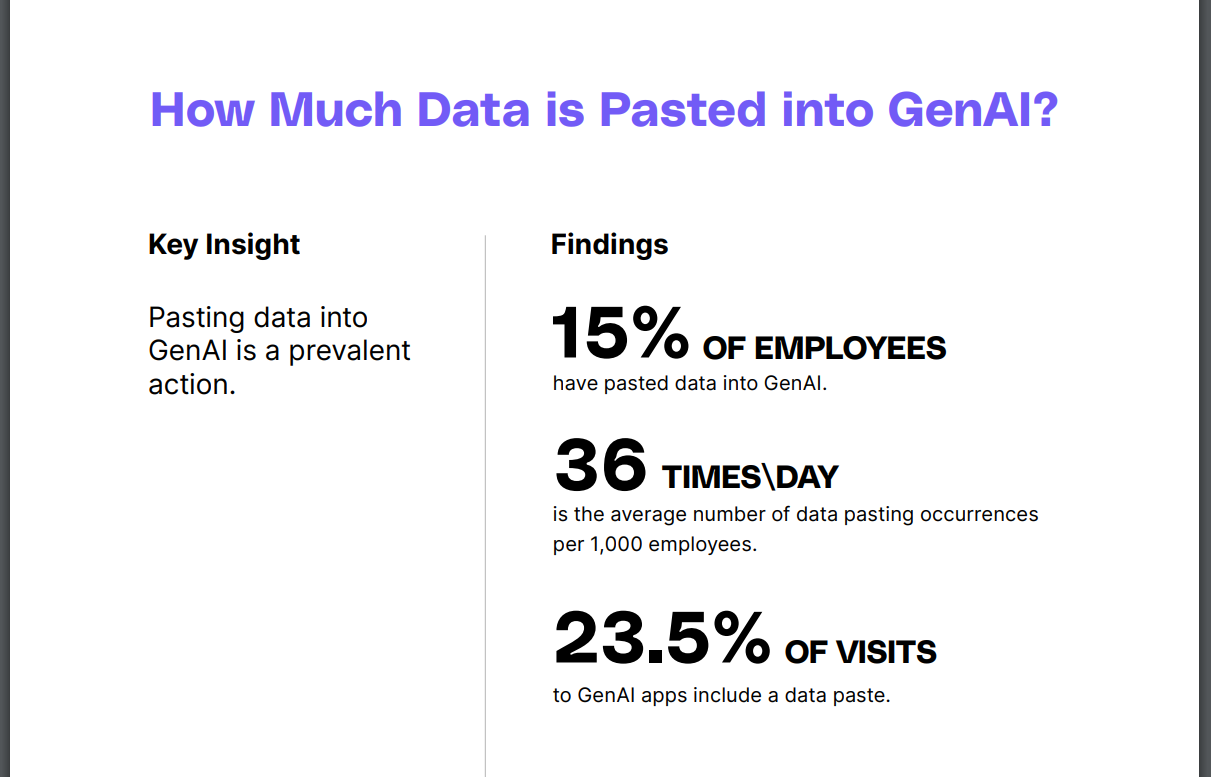

ChatGPT has become a core part of daily work for many employees around the world, but the data they feed it cannot be considered safe. A recent report that surveyed around 100,000 employees found that 15% of them use ChatGPT regularly, giving it a vast quantity of data about their employers.

Notably, 6% of the data employees send to ChatGPT can be considered sensitive. This is risky because such data could potentially end up in ChatGPT’s own database, leaving it far less secure than it would otherwise be.

Of the sensitive data being inadvertently sent to AI tools, 43% is internal business information, 31% is source code, and 12% is highly sensitive personally identifiable information.

This could lead to competitors or even malicious actors obtaining this data, and the affected companies might not realize it until it is too late. 44% of workers have used ChatGPT at least once in the past three months, and some visit it or similar sites more than 50 times a month.

4% of all employees paste sensitive data into ChatGPT every week. Of these workers, 50% work in Research and Development, 23% in Sales and Marketing, and 14% in finance departments.

Employees must be educated on these risks, since pasting sensitive data into AI tools can greatly increase the likelihood of cyberattacks. It could also enable corporate espionage, with ripple effects across the wider business community.
